- leaf pages of indexes becoming physically unordered within a given file

so as to no longer match the logical left-to-right linked-list ordering of the pages.

This type of fragmentation as we'll use it here

* relates in no way whatsoever to the physical pages being contiguous

(i.e. back to back, side by side, etc.) within the given file,

but only to the physical order of the pages within the file

compared to the logical order in the linked list;

* the difference between contiguous pages and properly ordered pages

would be that to be contiguous,

the pages must exist physically in side-by-side positions within the file

(i.e. pages 1,2,3,4,5,6,...n) with no gaps in between - to be properly ordered,

the pages must exist simply in logical order and on ever-increasing physical positions

within the file (i.e. pages 10, 17, 22, 23, 28, 42,...n).

Contiguous pages (for purposes of these conversations)

are always also properly ordered pages,

but properly ordered pages aren't necessarily contiguous.

Logical fragmentation also encompasses page density, or how full a page is.

Given that the page is the basic unit of storage for Sql Server,

it is also the basic size of data stored in your data cache

(a subset of your buffer pool, usually the largest subset);

hence,

the more dense the pages are,

the less cache that is either used or needed to read the same amount of data

- the less dense the pages,

the more cache that is needed,

the more pages that need to be read/inspected,

and the more pages that are needed to store the same amount of data.

This particular portion of logical fragmentation

it's really the only kind of "fragmentation" that can actually be helpful in some cases.

On the flip side, it's also the only kind of fragmentation

that will cause performance degradation for data that is in cache and not on disk

(all other types of fragmentation only impact performance

when actual disk IO is incurred - once the data is in cache,

all other types of fragmentation are totally irrelevant).

When talking about reading data, or selecting data,

this type of fragmentation (like all others) is bad,

for all the reasons mentioned above

(i.e. more pages to store the same amount of data,

bloated data cache,

more pages to scan/seek on when looking for records, etc.).

However, since most database systems aren't read-only in nature,

at some point data needs to be written to the database.

When new data is written to the database

and it needs to be placed in a particular location within an index

(determined by the index key(s)),

or if an existing row is updated to contain larger variable-length column values,

that data will need space to reside within the appropriate logical location in the index.

If free space exists on the page

where the existing record already resides,

or on the page where a new record will be inserted into,

then the new data is simply written to that page and life is good.

If the page is completely full

(or full enough to not have the required amount of space for the new data),

then a page split will occur,

a new page will be created,

and then the new data will be written.

Obviously,

this latter type of write operation will be significantly slower than the prior write operation

where free space existed on the page.

This is the case where purposely having a lower page density

and actually introducing some fragmentation into the system will help

- and this is the only kind of fragmentation that ever has a possible up-side.

To show some examples,

the following graphic displays a perfectly contiguous,

perfectly logical ordered index structure:

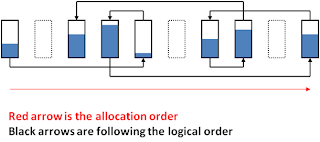

This next graphic displays

what the same structure might look like with pages that aren't full,

and aren't logically ordered, and also aren't contiguous:

what the same structure might look like with pages that aren't full,

and aren't logically ordered, and also aren't contiguous:

Source:

http://www.mssqltips.com/sqlservertip/2262/sql-server-fragmentation-what-it-is-what-types-there-are-part-2-of-9/

http://www.mssqltips.com/sqlservertip/2262/sql-server-fragmentation-what-it-is-what-types-there-are-part-2-of-9/